Continuous Locomotive Crowd Behavior Generation

Inhwan Bae, Junoh Lee and Hae-Gon Jeon*

Computer Vision and Pattern Recognition 2025

Abstract

Modeling and reproducing crowd behaviors are important in various domains including psychology, robotics, transport engineering and virtual environments. Conventional methods have focused on synthesizing momentary scenes, which have difficulty in replicating the continuous nature of real-world crowds. In this paper, we introduce a novel method for automatically generating continuous, realistic crowd trajectories with heterogeneous behaviors and interactions among individuals. We first design a crowd emitter model. To do this, we obtain spatial layouts from single input images, including a segmentation map, appearance map, population density map and population probability, prior to crowd generation. The emitter then continually places individuals on the timeline by assigning independent behavior characteristics such as agents' type, pace, and start/end positions using diffusion models. Next, our crowd simulator produces their long-term locomotions. To simulate diverse actions, it can augment their behaviors based on a Markov chain. As a result, our overall framework populates the scenes with heterogeneous crowd behaviors by alternating between the proposed emitter and simulator. Note that all the components in the proposed framework are user-controllable. Lastly, we propose a benchmark protocol to evaluate the realism and quality of the generated crowds in terms of the scene-level population dynamics and the individual-level trajectory accuracy. We demonstrate that our approach effectively models diverse crowd behavior patterns and generalizes well across different geographical environments.

Summary: A crowd emitter diffusion model and a state-switching crowd simulator for populating input scene images and generating lifelong crowd trajectories.

Presentation Video

🏢🚶‍♂️ Crowd Behavior Generation Benchmark 🏃‍♀️🏠

The goal of the Crowd Behavior Generation task is to automatically produce continuous and realistic crowd trajectories with heterogeneous behaviors and interactions in space from single input images. Unlike existing crowd simulation and trajectory prediction tasks, which fundamentally rely on initial observed states, the Crowd Behavior Generation task completely eliminates this dependency by continuously populating environments.

Motivation

Performance improvements in traditional trajectory prediction tasks have reached their limit. Moreover, the reliance on historical conditions in trajectory prediction imposes overly strict constraints, restricting its applicability in diverse scenarios such as synthetic data generation for autonomous vehicles, crowd simulations in urban planning, and visual cinematography involving pedestrian synthesis for movie backgrounds.

Therefore, we propose a paradigm shift from conventional prediction tasks toward more versatile generation tasks.

Features

- Repurposed Trajectory Datasets: A new benchmark that reuses existing real-world human trajectory datasets, adapting them for crowd trajectory generation.

- Image-Only Input: Eliminates conventional observation trajectory dependency and requires only a single input image to fully populate the scene with crowds.

- Lifelong Simulation: Generates continuous trajectories where people dynamically enter and exit the scene, replicating ever-changing real-world crowd dynamics.

- Two-Tier Evaluation: Assesses performance on both scene-level realism (e.g., density, frequency, coverage, and population metrics) and agent-level accuracy (e.g., kinematics, DTW, diversity, and collision rate).
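As an illustration of the agent-level side of the evaluation, the DTW (dynamic time warping) distance between a generated trajectory and a real one can be sketched as below. This is a minimal textbook version, assuming trajectories are lists of 2D points and using Euclidean point distance; the function name `dtw_distance` is hypothetical, and the benchmark's actual implementation and normalization may differ.

```python
import math

def dtw_distance(traj_a, traj_b):
    """Classic O(n*m) dynamic time warping between two 2D trajectories.

    traj_a, traj_b: sequences of (x, y) points, possibly of different lengths.
    Returns the minimum cumulative point-to-point distance over all
    monotonic alignments of the two sequences.
    """
    n, m = len(traj_a), len(traj_b)
    # cost[i][j] = best alignment cost of the first i points of a
    # with the first j points of b.
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(traj_a[i - 1], traj_b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # advance only in traj_a
                cost[i][j - 1],      # advance only in traj_b
                cost[i - 1][j - 1],  # advance in both
            )
    return cost[n][m]
```

Because the alignment may stretch or compress time, two agents that follow the same path at different paces receive a small distance, which is why DTW is a reasonable fit for comparing generated and real trajectories that have no shared start time.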

🚵 CrowdES Framework 🚵

We present a method for generating realistic, continuous crowd behaviors from input scene images by alternating between a crowd emitter and a crowd simulator. The crowd emitter continuously populates the scene with individuals characterized by various attributes, while the crowd simulator generates the locomotion trajectory that carries each agent from its starting point to its destination.

- Crowd Emitter: A diffusion-based model that iteratively “emits” new agents by sampling when and where they appear on spatial layouts.

- Crowd Simulator: A state-switching system that generates continuous trajectories with agents dynamically switching behavior modes.

- Controllability & Flexibility: Users can override or customize scene-level and agent-level parameters at runtime.

- Sim2Real & Real2Sim Capability: The framework can bridge synthetic and real-world scenarios for interdisciplinary research.
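The alternation between emitter and simulator can be pictured with the toy loop below. It is only a sketch under loud assumptions: uniform random sampling stands in for the diffusion-based emitter, a straight-line walker stands in for the learned simulator, and the two behavior modes (`walk`, `pause`) with their transition probabilities are made up for illustration. None of the names (`Agent`, `emit`, `step`, `run`) come from the CrowdES code.

```python
import random
from dataclasses import dataclass

# Hypothetical behavior modes and Markov transition probabilities
# (illustrative values, not taken from the paper).
TRANSITIONS = {
    "walk": {"walk": 0.9, "pause": 0.1},
    "pause": {"walk": 0.5, "pause": 0.5},
}

@dataclass
class Agent:
    pos: tuple
    goal: tuple
    pace: float          # distance covered per step while walking
    mode: str = "walk"

def emit(rng, n_new, size=10.0):
    """Stand-in emitter: uniform sampling replaces the diffusion model."""
    def point():
        return (rng.uniform(0, size), rng.uniform(0, size))
    return [Agent(point(), point(), rng.uniform(0.5, 1.5)) for _ in range(n_new)]

def step(agent, rng):
    """Stand-in simulator step: switch mode via the Markov chain, then move."""
    probs = TRANSITIONS[agent.mode]
    agent.mode = rng.choices(list(probs), weights=list(probs.values()))[0]
    if agent.mode == "walk":
        dx, dy = agent.goal[0] - agent.pos[0], agent.goal[1] - agent.pos[1]
        dist = (dx * dx + dy * dy) ** 0.5
        if dist <= agent.pace:
            agent.pos = agent.goal  # close enough: snap to the destination
        else:
            agent.pos = (agent.pos[0] + agent.pace * dx / dist,
                         agent.pos[1] + agent.pace * dy / dist)

def run(n_steps, emit_every=5, n_new=3, seed=0):
    """Alternate emitter and simulator; return the population at each step."""
    rng = random.Random(seed)
    agents, population = [], []
    for t in range(n_steps):
        if t % emit_every == 0:
            agents.extend(emit(rng, n_new))   # emitter phase: new agents enter
        for a in agents:
            step(a, rng)                      # simulator phase: advance everyone
        agents = [a for a in agents if a.pos != a.goal]  # arrived agents leave
        population.append(len(agents))
    return population
```

Calling `run(50)` returns the per-step population count. Since agents keep entering and leaving, the scene never needs an observed initial state, which is the property the framework relies on for lifelong simulation.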

🚀 Generation Results 🚀

Time-Varying Behavior Changes

CrowdES generates continuous, natural actions with smooth behavioral transitions over time, in contrast to the shortest paths produced by algorithm-based methods and the noisy paths produced by diffusion-based methods.

A video tracking an individual throughout a 10-minute scenario. CrowdES generates diverse and socially acceptable behaviors using the state-switching crowd simulator.

Flexibility

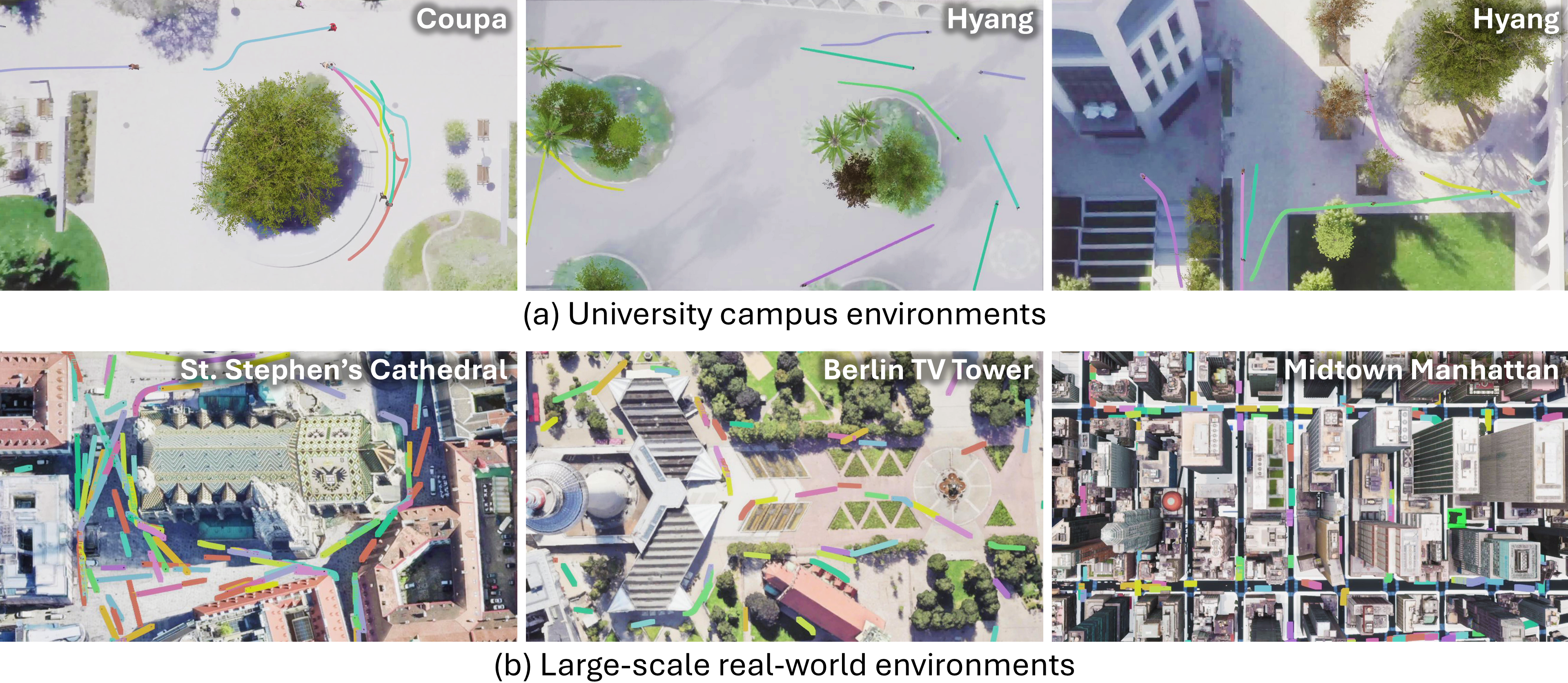

CrowdES flexibly populates environments and simulates realistic crowd behaviors within large-scale, complex New York City environments covering a 2 km × 1 km area.

Controllability

CrowdES offers controllability for various applications. Starting from an initial uncontrolled scenario, users can adjust scene-level and agent-level parameters at the same time.

Sim2Real & Real2Sim

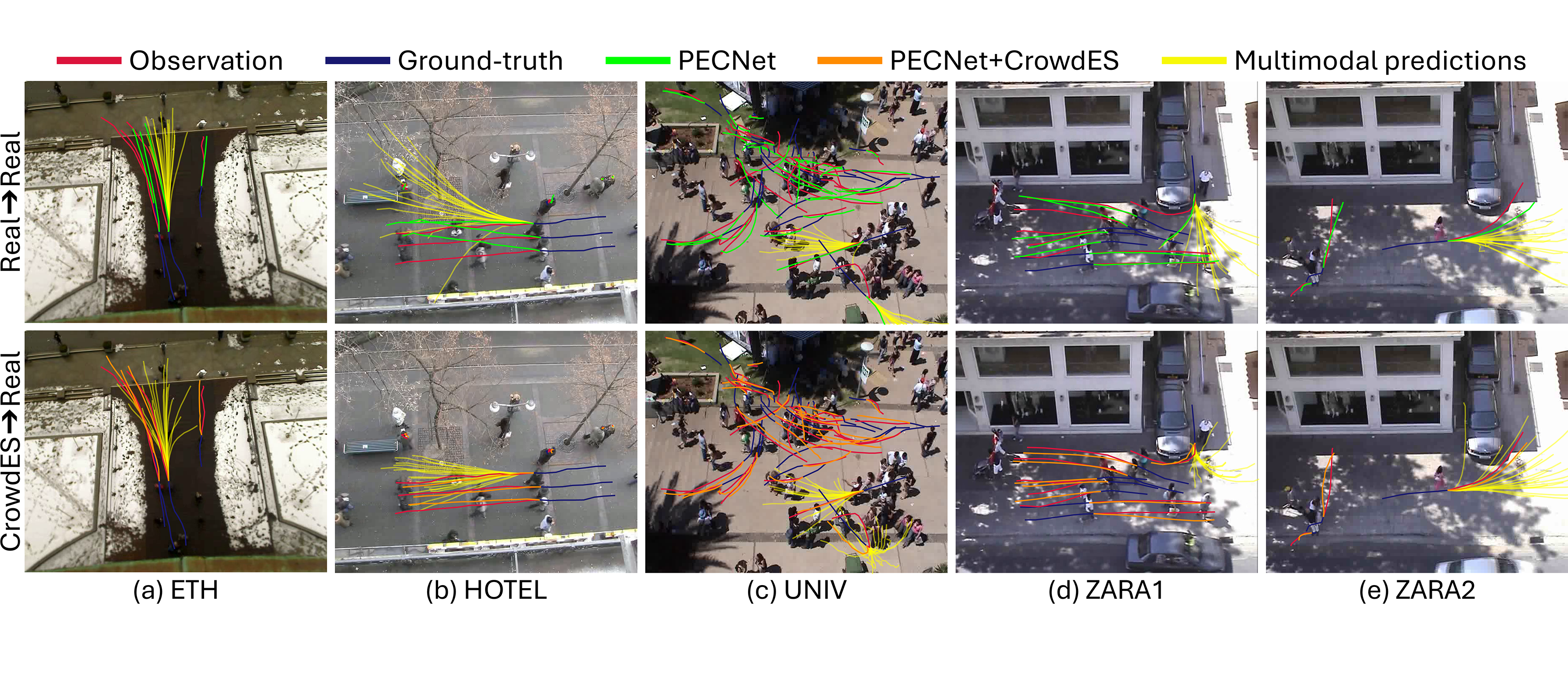

Sim2Real Transfer

We train an off-the-shelf trajectory predictor on synthetic data generated by CrowdES and evaluate it on real-world data. Compared to the top-row model, which is trained on real data, the bottom-row model, which is trained on synthetic data, captures more diverse motions and accurately predicts future trajectories.

Real2Sim Transfer

CrowdES generates virtual crowds naturally emerging from buildings or scene boundaries and moving toward their destinations. During their locomotion toward destinations, crowds avoid collisions with environmental and dynamic obstacles like trees, bushes, and other agents.
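One simple way to quantify collision avoidance between agents is a collision rate, sketched below. The definition used here is an assumption: an agent counts as collided if it comes within twice a fixed body radius of any other agent at a shared frame. The function name `collision_rate`, the data layout and the default `radius` are hypothetical, and static obstacles such as trees and bushes are not covered.

```python
import math

def collision_rate(trajectories, radius=0.2):
    """Fraction of agents that collide with at least one other agent.

    trajectories: dict mapping agent_id -> dict mapping frame -> (x, y).
    radius: assumed body radius in the same units as the coordinates;
            two agents collide when their centers are closer than 2 * radius.
    """
    ids = list(trajectories)
    collided = set()
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            # Only frames where both agents are present can produce a collision.
            shared = trajectories[a].keys() & trajectories[b].keys()
            if any(
                math.dist(trajectories[a][f], trajectories[b][f]) < 2 * radius
                for f in shared
            ):
                collided.update((a, b))
    return len(collided) / len(ids) if ids else 0.0
```

Indexing positions by frame matters in the lifelong setting, because agents enter and exit at different times and two paths that cross in space do not collide unless they do so at the same moment.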

Generated vs. Real-World Behaviors

Scene-Level Behaviors

Agent-Level Behaviors

BibTeX

@inproceedings{bae2025crowdes,
  title={Continuous Locomotive Crowd Behavior Generation},
  author={Bae, Inhwan and Lee, Junoh and Jeon, Hae-Gon},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2025}
}