A Set of Control Points Conditioned Pedestrian Trajectory Prediction

Inhwan Bae and Hae-Gon Jeon*

Association for the Advancement of Artificial Intelligence 2023

Abstract

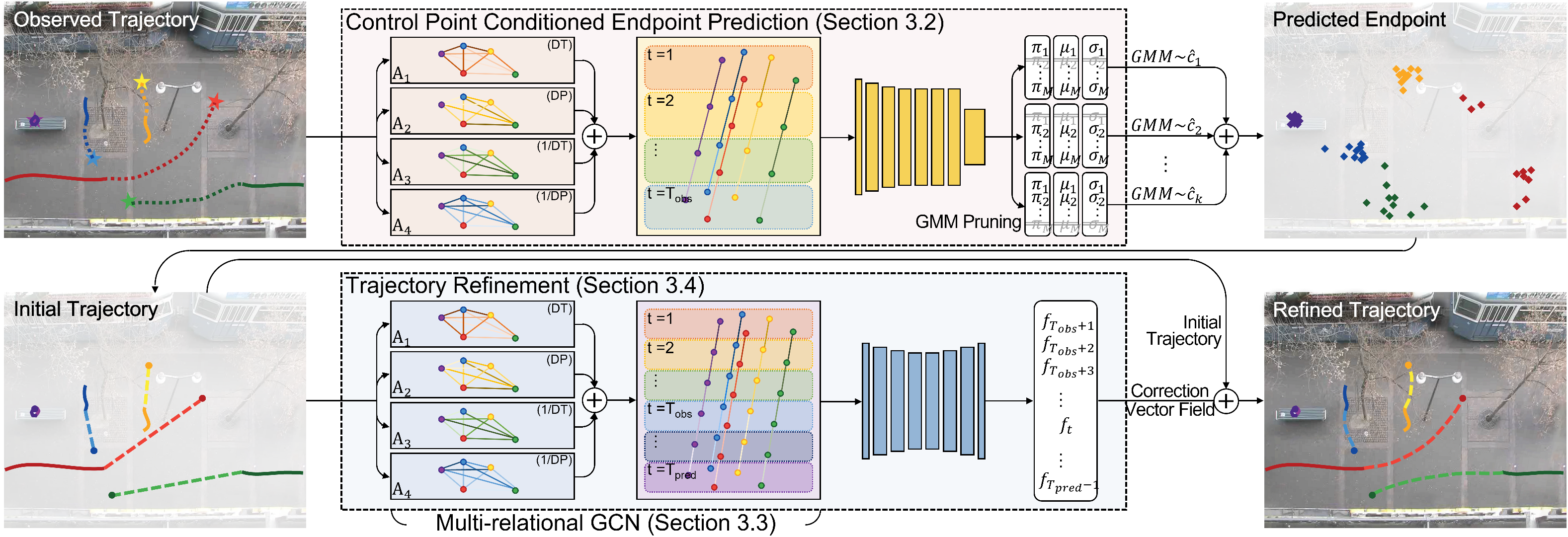

Predicting the trajectories of pedestrians in crowded conditions is an important task for applications like autonomous navigation systems. Previous studies have tackled this problem using two strategies: they (1) infer all future steps recursively, or (2) predict the potential destinations of pedestrians at once and interpolate the intermediate steps to arrive there. However, these strategies often suffer from the accumulated errors of the recursive inference, or from restrictive assumptions about social relations in the intermediate path. In this paper, we present a graph convolutional network-based trajectory prediction method. First, we propose a control point prediction that divides the future path into three sections and infers the intermediate destinations of pedestrians to reduce the accumulated error. To do this, we construct multi-relational weighted graphs to account for the pedestrians' physical and complex social relations. We then introduce a trajectory refinement step based on a spatio-temporal and multi-relational graph. By considering the social interactions between neighbors, the refinement step yields better predictions. In experiments, the proposed network achieves state-of-the-art performance on various real-world trajectory prediction benchmarks.

Summary: A control point conditioned prediction and an initial trajectory refinement network for pedestrian trajectory prediction.

Control Point Conditioned Endpoint Prediction

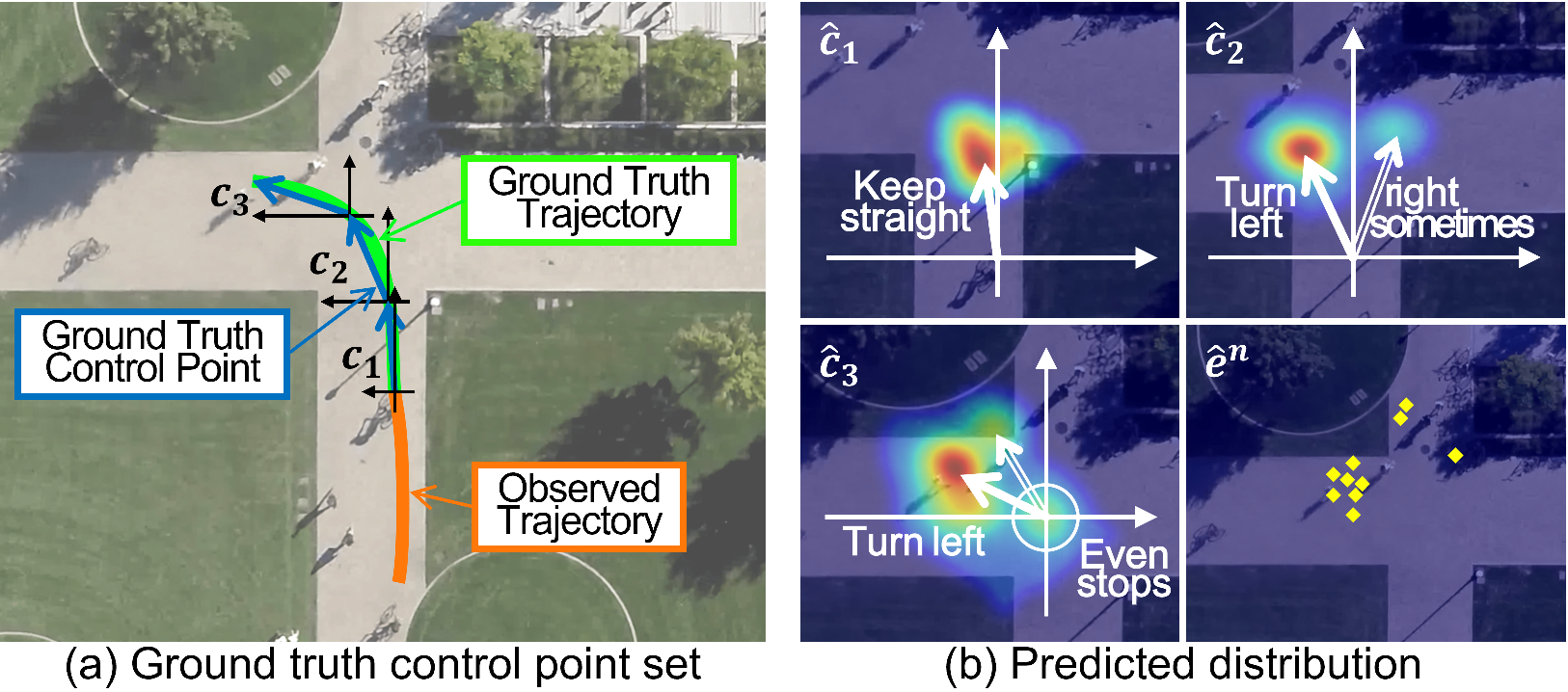

Graph Control Point Prediction

The key idea of our model is to use multiple control points when inferring potential endpoints. Our novel control point-based endpoint prediction can handle events that occur unexpectedly within the predicted time frames. First, we divide the future sequence into equal sections and define each control point as the displacement within one section. Next, we present a control point prediction module. We update the feature map of the observed input sequence using a multi-relational GCN (MRGCN), then use a mixture density network (MDN) to predict a multivariate Gaussian mixture model (GMM), from which the 2D displacements of the control points are sampled.
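A minimal sketch of how ground-truth control points could be derived from a future trajectory, following the definition above: the future sequence is split into equal sections and each control point is the displacement across one section. The three sections match the abstract; the 12-frame horizon, the tuple-based point type, and the helper name `control_points_from_future` are assumptions for illustration, not the authors' code.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def control_points_from_future(last_obs: Point, future: List[Point],
                               num_sections: int = 3) -> List[Point]:
    """Return one 2D displacement (control point) per equal-length section.

    Control point k is the displacement from the end of section k - 1
    (the last observed position when k == 0) to the end of section k.
    """
    if num_sections <= 0 or len(future) % num_sections != 0:
        raise ValueError("future length must be divisible by num_sections")
    section_len = len(future) // num_sections
    controls: List[Point] = []
    prev = last_obs
    for k in range(num_sections):
        end = future[(k + 1) * section_len - 1]  # last frame of section k
        controls.append((end[0] - prev[0], end[1] - prev[1]))
        prev = end
    return controls


# Example: 12 future frames moving at a constant (1.0, 0.5) per frame.
future = [(float(t), 0.5 * t) for t in range(1, 13)]
print(control_points_from_future((0.0, 0.0), future))
# → [(4.0, 2.0), (4.0, 2.0), (4.0, 2.0)]
```

Because each control point is measured from the end of the previous section, the control points always sum to the total displacement from the last observation to the endpoint.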

GMM Pruning

Public pedestrian trajectory datasets contain abnormal behaviors of agents. Since statistical models must allocate a portion of their capacity to cover these abnormal cases, they are left with relatively less capacity for generating realistic paths. We observe that the Gaussian components for abnormal behaviors tend to have large standard deviations and low mixing coefficients. Samples drawn from them behave like statistically out-of-distribution samples lying far from the training distribution, and they degrade performance. To address this issue, we devise GMM pruning, which cuts off the lower half of the bivariate Gaussian components based on their predicted mixing coefficients. Through GMM pruning, potential control points are assigned to feasible areas without adding any learnable parameters.
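The pruning step can be sketched as follows, under one reading of the description above: rank the mixture components by predicted mixing coefficient, drop the lower half, renormalize the remaining weights, and sample a 2D control point from the pruned mixture. The `Gaussian2D` layout (means, standard deviations, correlation), the rounding rule for an odd number of components, and the function names are hypothetical; the bivariate sampler uses the standard Cholesky construction.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Gaussian2D:
    """One bivariate Gaussian component of the mixture (hypothetical layout)."""
    weight: float               # mixing coefficient pi_k
    mu: Tuple[float, float]     # mean (mu_x, mu_y)
    sigma: Tuple[float, float]  # standard deviations (s_x, s_y)
    rho: float                  # correlation coefficient in (-1, 1)


def prune_gmm(components: List[Gaussian2D]) -> List[Gaussian2D]:
    """Keep the half of the components with the largest mixing coefficients
    (rounding up) and renormalize their weights to sum to one."""
    ranked = sorted(components, key=lambda c: c.weight, reverse=True)
    kept = ranked[: max(1, math.ceil(len(ranked) / 2))]
    total = sum(c.weight for c in kept)
    return [Gaussian2D(c.weight / total, c.mu, c.sigma, c.rho) for c in kept]


def sample_gmm(components: List[Gaussian2D], rng: random.Random) -> Tuple[float, float]:
    """Pick a component by weight, then draw a correlated 2D sample from it."""
    comp = rng.choices(components, weights=[c.weight for c in components], k=1)[0]
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    sx, sy = comp.sigma
    x = comp.mu[0] + sx * z1
    y = comp.mu[1] + sy * (comp.rho * z1 + math.sqrt(1.0 - comp.rho ** 2) * z2)
    return (x, y)


# Two confident components and two wide, low-weight ones ("abnormal" modes).
mixture = [
    Gaussian2D(0.50, (1.0, 0.0), (0.1, 0.1), 0.0),
    Gaussian2D(0.30, (0.0, 1.0), (0.1, 0.1), 0.0),
    Gaussian2D(0.15, (5.0, 5.0), (3.0, 3.0), 0.0),
    Gaussian2D(0.05, (-5.0, 5.0), (3.0, 3.0), 0.0),
]
pruned = prune_gmm(mixture)
control_point = sample_gmm(pruned, random.Random(0))
```

Pruning here only changes how samples are drawn, so, as in the text, it adds no learnable parameters.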

Endpoint Sampling

The final endpoint is determined from the set of control points sampled through this probabilistic process. While existing works infer the final endpoint in a single step, our probabilistic model determines it by combining the social interactions computed at each intermediate point. Because each control point represents a displacement relative to the previous point, the absolute coordinates of the final endpoint are obtained by adding all the control points to the last coordinates of the observed sequence.
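A minimal sketch of the endpoint computation described above, assuming control points are stored as relative (dx, dy) tuples in section order. `endpoint_from_control_points` and `absolute_control_points` are hypothetical helper names.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def endpoint_from_control_points(last_obs: Point, controls: List[Point]) -> Point:
    """Absolute endpoint = last observed position + sum of relative control points."""
    dx = sum(c[0] for c in controls)
    dy = sum(c[1] for c in controls)
    return (last_obs[0] + dx, last_obs[1] + dy)


def absolute_control_points(last_obs: Point, controls: List[Point]) -> List[Point]:
    """Absolute position reached after each control point; the last is the endpoint."""
    points: List[Point] = []
    x, y = last_obs
    for dx, dy in controls:
        x, y = x + dx, y + dy
        points.append((x, y))
    return points


controls = [(1.0, 0.5), (1.5, 0.0), (0.5, -0.5)]
print(absolute_control_points((2.0, 1.0), controls))
# → [(3.0, 1.5), (4.5, 1.5), (5.0, 1.0)]
```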

Trajectory Refinement

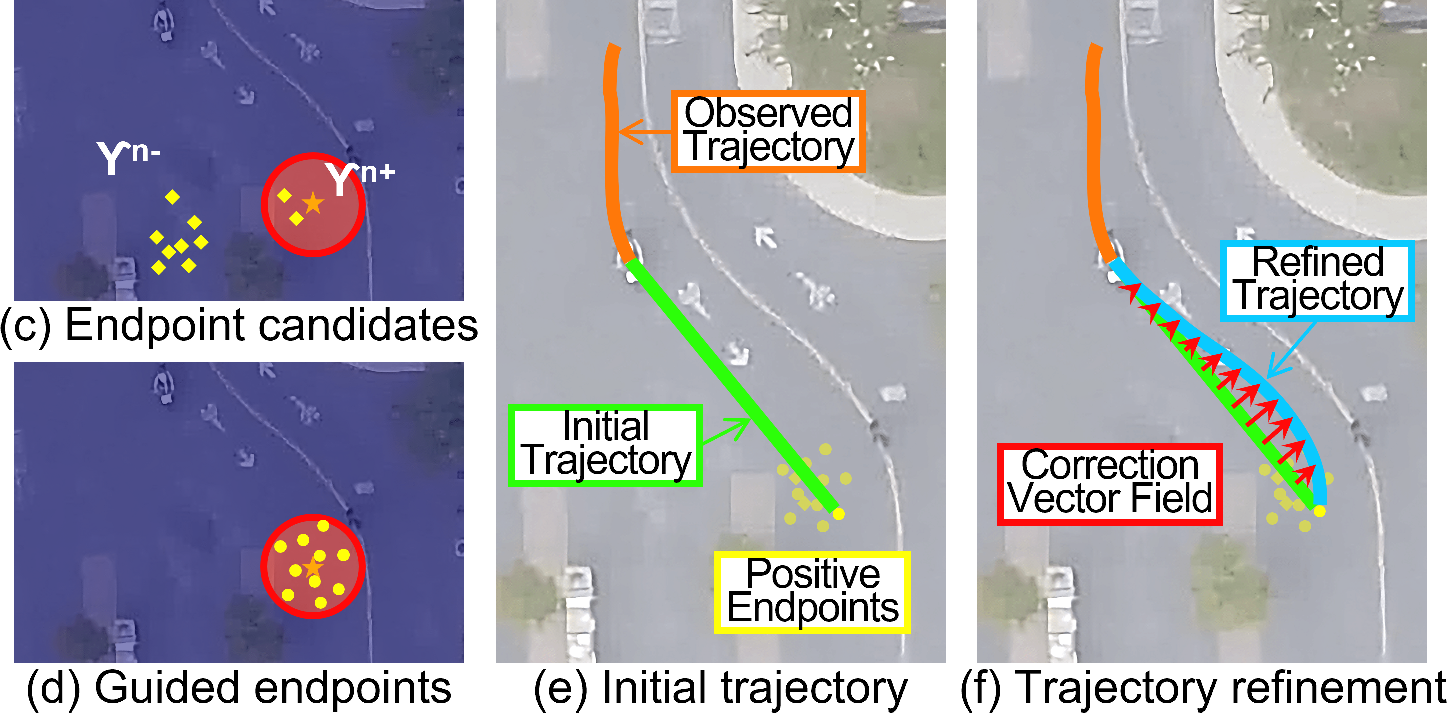

Guided Endpoint Sampling

To train the control point prediction module and the trajectory refinement module jointly, we need to decouple the two modules. Since a predicted endpoint is not always close to the ground-truth position, we define a rule that limits which predicted endpoints are used at training time. We use not only the ground-truth endpoint but also all the predicted endpoints close to it. Specifically, we divide the predicted endpoints into a positive set and a negative set, and we backpropagate gradients only for the positive set using a valid mask. During the initial training phase, the positive set may be extremely small because the endpoint candidates have not yet converged. To address this issue, we additionally sample a set of positive endpoints within a range around the ground truth, called guided endpoints.
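The positive/negative split, the masked loss, and the guided endpoints can be sketched as below. The distance threshold `radius`, the uniform-in-a-disk sampling of guided endpoints, and all function names are assumptions, since the text only states that positives are endpoints close to the ground truth and that guided endpoints are sampled within its range. Plain Python has no autograd, so the valid mask is shown restricting a loss average; in a deep learning framework the same mask would keep negative samples from contributing gradients.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def valid_mask(pred_endpoints: List[Point], gt: Point, radius: float) -> List[bool]:
    """True marks a positive endpoint (within `radius` of the ground truth)."""
    return [math.dist(p, gt) <= radius for p in pred_endpoints]


def masked_mean(losses: List[float], mask: List[bool]) -> float:
    """Average the per-endpoint losses over the positive set only."""
    kept = [loss for loss, m in zip(losses, mask) if m]
    return sum(kept) / len(kept) if kept else 0.0


def sample_guided_endpoints(gt: Point, radius: float, n: int,
                            rng: random.Random) -> List[Point]:
    """Draw n endpoints uniformly inside a disk of `radius` around the ground truth."""
    guided: List[Point] = []
    for _ in range(n):
        r = radius * math.sqrt(rng.random())  # sqrt gives uniform density over area
        theta = rng.uniform(0.0, 2.0 * math.pi)
        guided.append((gt[0] + r * math.cos(theta), gt[1] + r * math.sin(theta)))
    return guided


def training_endpoints(pred_endpoints: List[Point], gt: Point, radius: float,
                       num_guided: int, rng: random.Random):
    """Return (ground truth + positive predictions + guided endpoints, mask)."""
    mask = valid_mask(pred_endpoints, gt, radius)
    positives = [p for p, m in zip(pred_endpoints, mask) if m]
    guided = sample_guided_endpoints(gt, radius, num_guided, rng)
    return [gt] + positives + guided, mask
```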

Initial Trajectory Prediction

We build an initial trajectory from the guided endpoints to make our trajectory refinement module tractable. The simplest way to do this is to connect the control points through linear interpolation. However, we observe that although the control points help infer accurate pedestrian destinations, using them in the initial trajectory acts as a hard constraint. Therefore, for each endpoint we generate a single initial trajectory without any control points by linearly interpolating between the last observed frame and the endpoint.
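A minimal sketch of this initial trajectory: one straight line per endpoint from the last observed frame, with no control points. Placing the first predicted frame at fraction 1/T of the way (so the last frame coincides with the endpoint) is an assumption, as is the helper name `initial_trajectory`.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def initial_trajectory(last_obs: Point, endpoint: Point, pred_len: int) -> List[Point]:
    """Linearly interpolate pred_len future frames from last_obs to endpoint.

    Frame t (1-based) lies at fraction t / pred_len of the way, so the
    last frame equals the endpoint and last_obs itself is excluded.
    """
    (x0, y0), (x1, y1) = last_obs, endpoint
    return [(x0 + (x1 - x0) * t / pred_len, y0 + (y1 - y0) * t / pred_len)
            for t in range(1, pred_len + 1)]


traj = initial_trajectory((0.0, 0.0), (12.0, 6.0), 12)
print(traj[0], traj[-1])
# → (1.0, 0.5) (12.0, 6.0)
```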

Graph Trajectory Refinement

Next, we present a novel refinement module that yields an accurate trajectory from the observed trajectory and the initial trajectory. By concatenating the two trajectories along the time axis, we aggregate the social interactions over all time frames using the MRGCN. The correction vector field is computed from these features and used to obtain the final refined trajectory. Unlike existing methods, which model social interactions using only the observations, our refinement module captures more complex social relations because the MRGCN also operates on the interpolated points and the endpoint.
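The data flow of the refinement step can be sketched as below for a scene with several agents: each agent's observed and initial trajectories are concatenated along the time axis, a correction vector is produced for every future frame, and the refined trajectory is the initial trajectory plus that correction. The MRGCN itself is not reproduced; `correction_fn` is a hypothetical placeholder for it, and treating the correction as an additive offset is an assumption of this sketch.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]
Trajectory = List[Point]
Scene = List[Trajectory]  # one trajectory per agent; equal lengths assumed

# Hypothetical placeholder for the MRGCN and its output head: it sees every
# agent's full (observed + initial) trajectory, so it can model interactions,
# and returns one correction vector per future frame for each agent.
CorrectionFn = Callable[[Scene, int], List[List[Point]]]


def refine_scene(observed: Scene, initial: Scene,
                 correction_fn: CorrectionFn) -> Scene:
    # Concatenate observed and initial trajectories along the time axis.
    full = [obs + init for obs, init in zip(observed, initial)]
    pred_len = len(initial[0])
    corrections = correction_fn(full, pred_len)
    refined: Scene = []
    for init, corr in zip(initial, corrections):
        if len(corr) != len(init):
            raise ValueError("need one correction vector per future frame")
        # Additive correction (assumption of this sketch).
        refined.append([(x + dx, y + dy) for (x, y), (dx, dy) in zip(init, corr)])
    return refined


def zero_correction(full: Scene, pred_len: int) -> List[List[Point]]:
    """Trivial stand-in: no correction, so refinement returns the initial path."""
    return [[(0.0, 0.0)] * pred_len for _ in full]
```

Keeping the correction model behind a single callable isolates the graph network from the bookkeeping of concatenation and offsets, so the same wrapper works for any model that maps the full scene to per-frame corrections.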

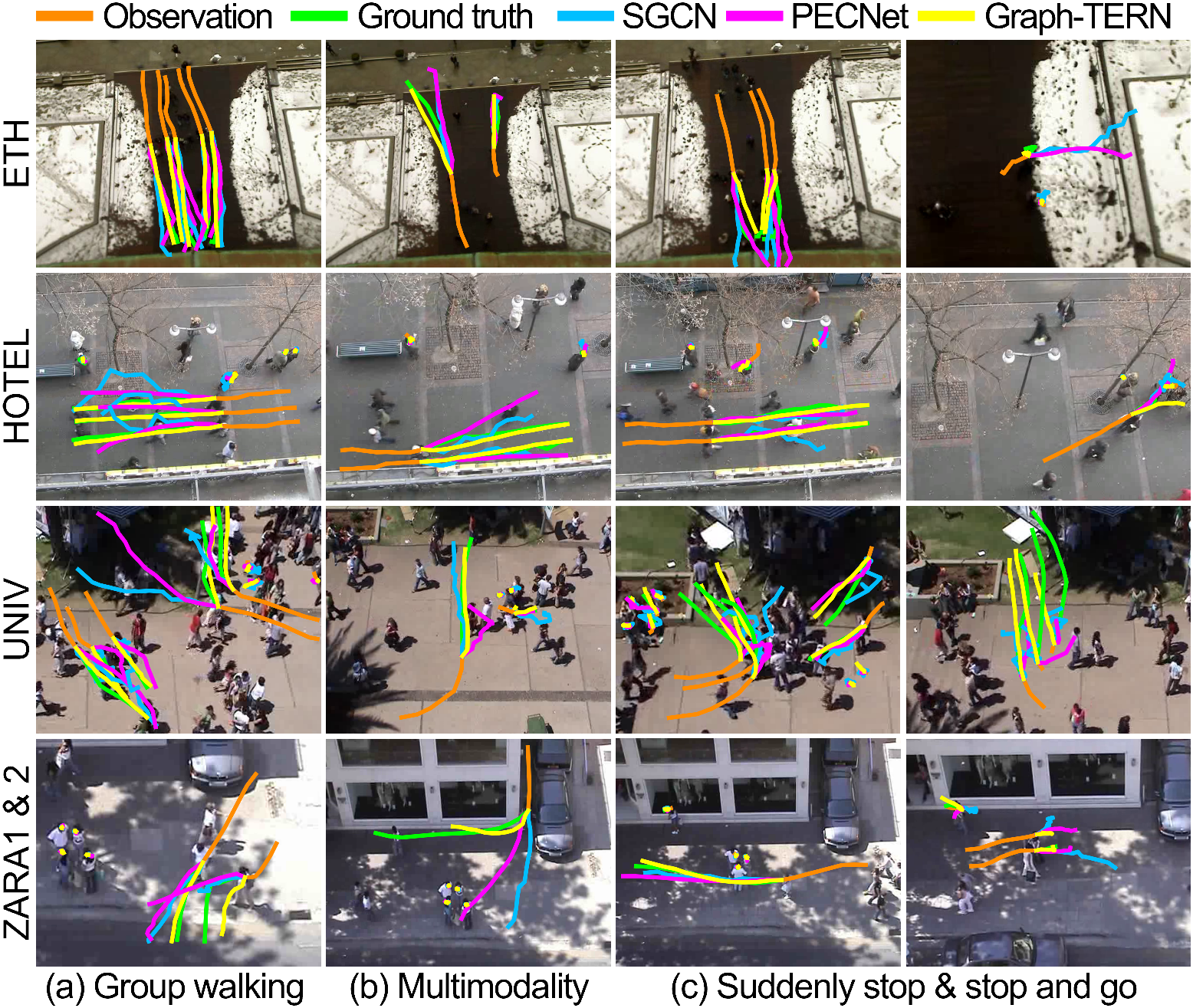

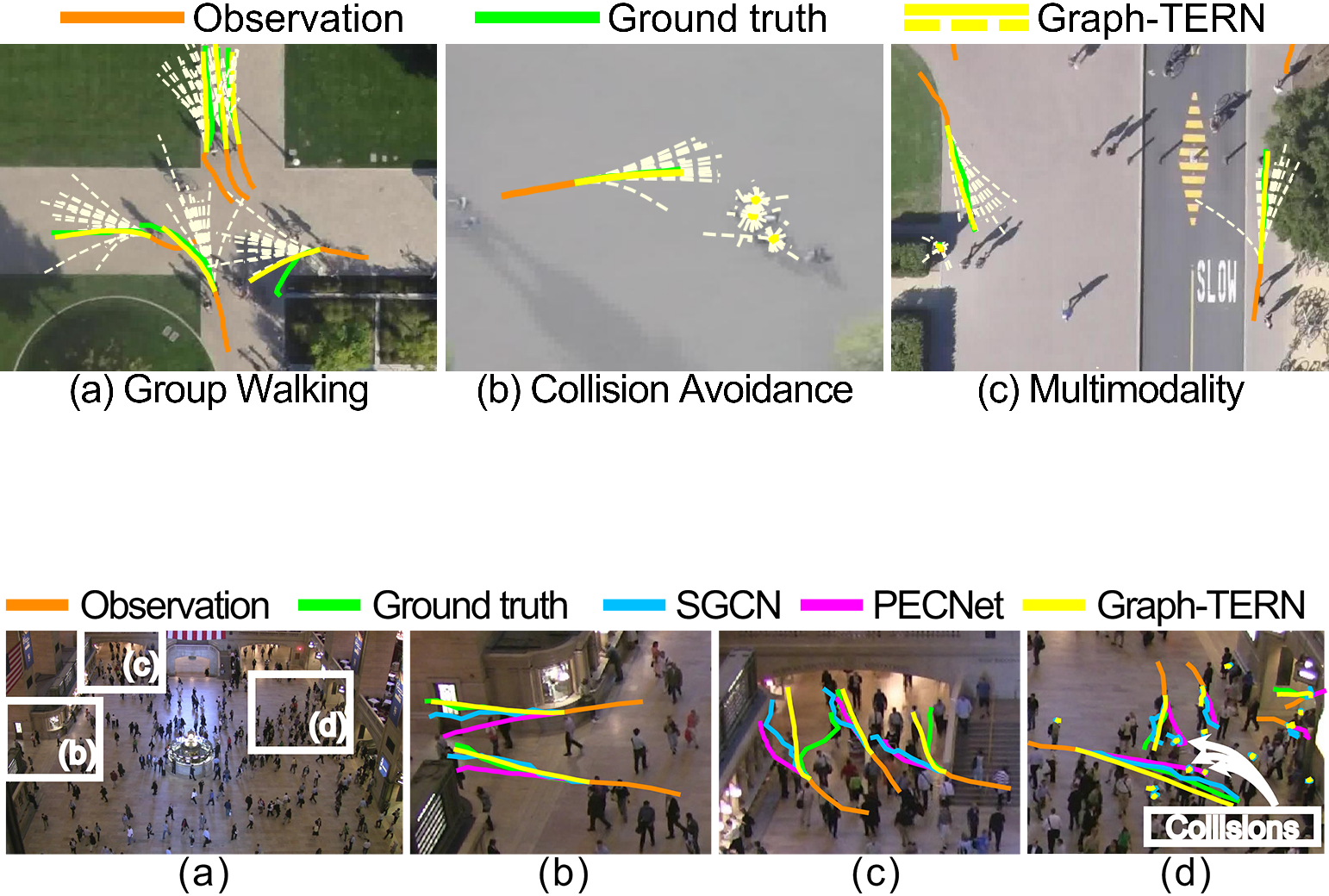

Qualitative Results

BibTeX

@article{bae2023graphtern,
  title={A Set of Control Points Conditioned Pedestrian Trajectory Prediction},
  author={Bae, Inhwan and Jeon, Hae-Gon},
  journal={Proceedings of the AAAI Conference on Artificial Intelligence},
  year={2023}
}